Meta announced the “Llama 3.2” model series on September 25, 2024. Remarkably, the lightweight 3B-parameter model in this series can run on smartphones, including iPhones and Android devices.

Recent advances in open-source LLMs, combined with their growing efficiency and the improved specs of smartphones, have made it possible to run AI models locally, directly on the device.

This article walks you through using “PocketPal,” an iOS/Android app, to try a local LLM on your iPhone. Even if your iPhone is an older model that doesn’t support Apple’s own AI, “Apple Intelligence,” you can still explore the potential of local LLMs.

“PocketPal”: Running Local LLMs on Your Smartphone

“PocketPal” is an app that allows you to download and run open-source AI models like Meta’s Llama, Google’s Gemma, and Microsoft’s Phi on your smartphone. You can chat with AI, similar to ChatGPT, even without an internet connection.

Because your prompts and the model’s responses are processed on the device and never sent online, it offers significantly better privacy and security than services like ChatGPT or Claude. This makes it particularly useful for handling sensitive information, such as emails containing personal data.

Best of all, PocketPal is available for both iOS and Android, and it’s completely free to use.

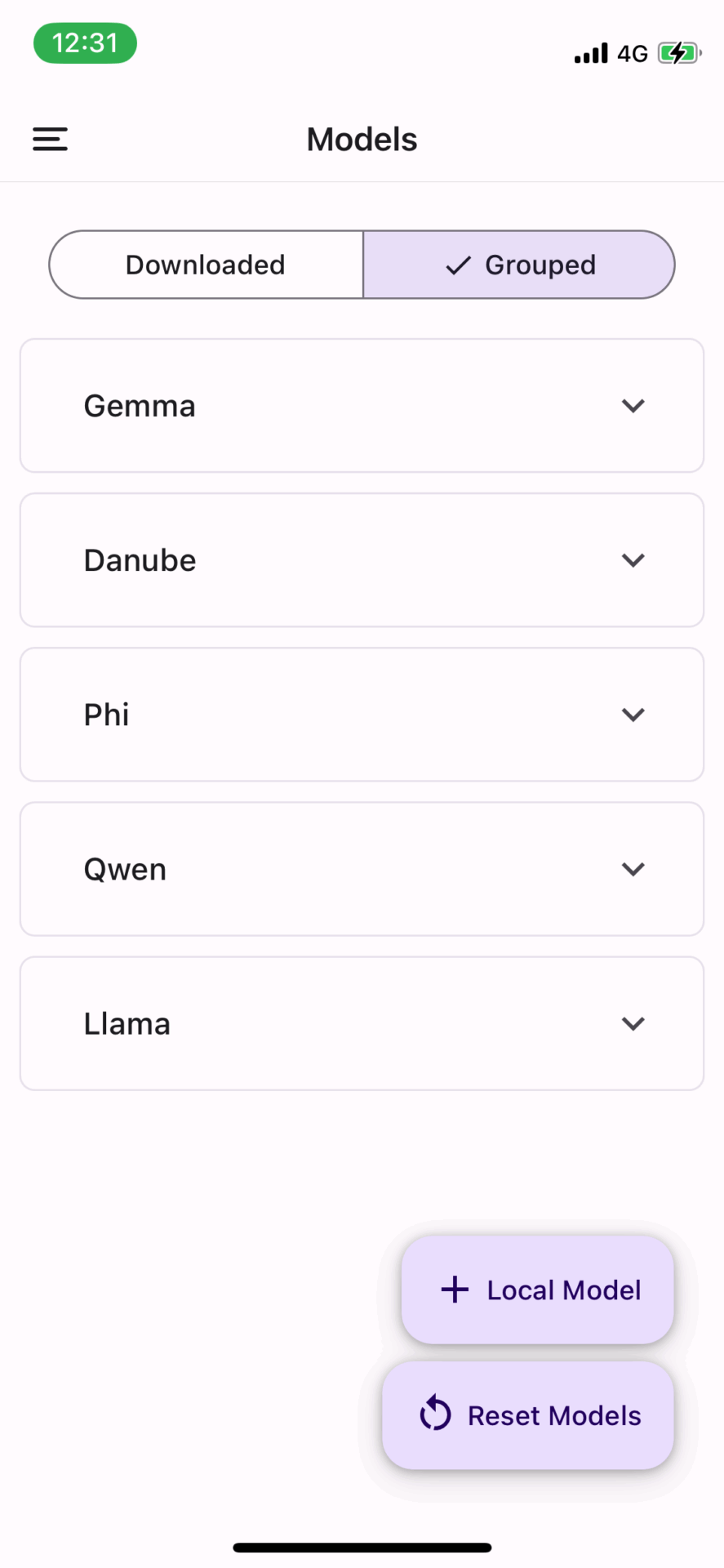

AI Models Available on “PocketPal”

As of October 9, 2024, PocketPal offers models from five open-source LLM series, each in a lightweight version of a few billion parameters or fewer:

- Gemma 2 2B

- Danube 3

- Phi 3.5 mini

- Qwen 2.5 3B

- Llama 3.2 3B

The lineup covers a wide range of major open-source LLMs, from Alibaba’s Qwen to H2O.ai’s Danube.

To use a model, simply select it and tap “Download” to save it to your iPhone. The app can also load model files already stored on your device, which suggests that users may be able to add newer lightweight LLMs in the future.

How to Chat with Llama 3.2 3B on Your Smartphone

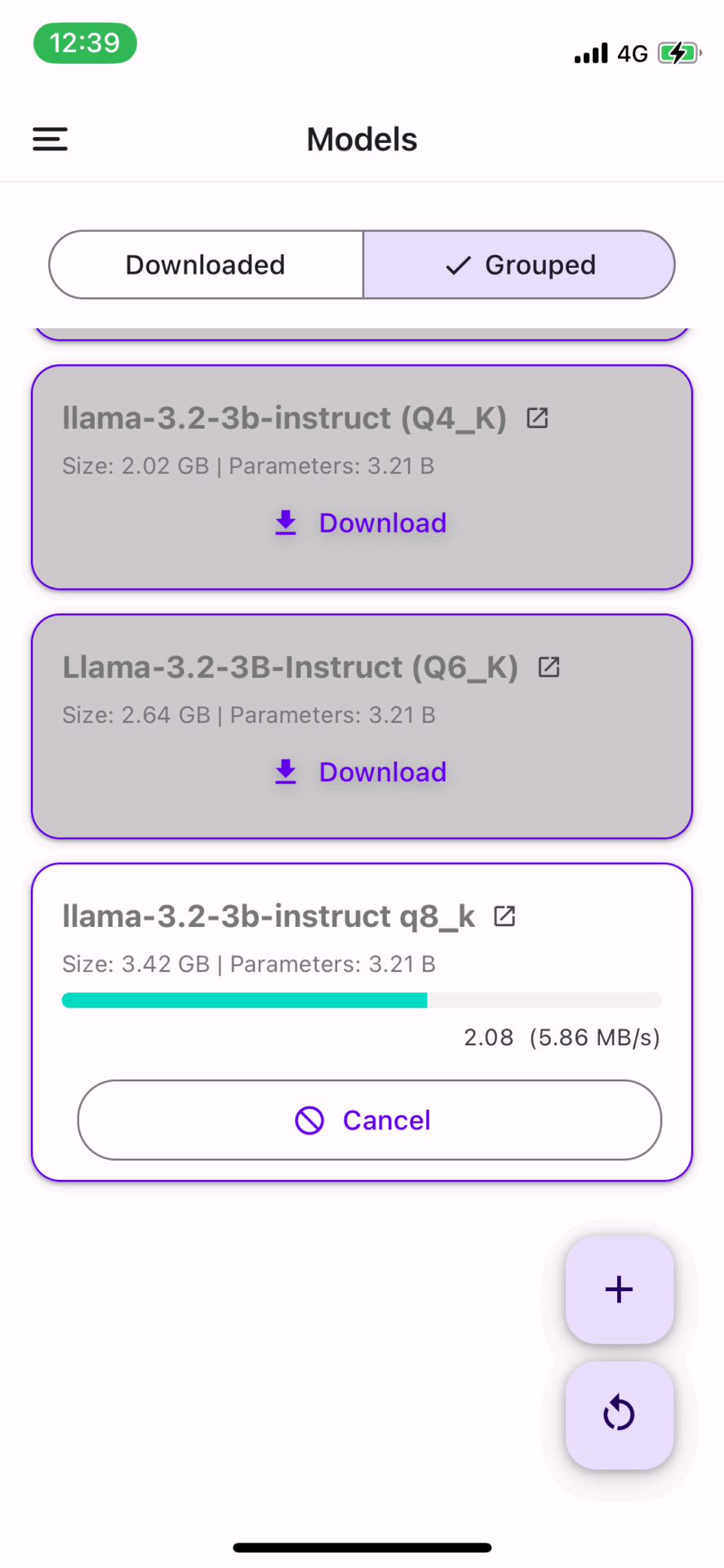

For this demonstration, I downloaded Llama 3.2 3B using PocketPal.

Although the model size is relatively large at 3.42GB, it took only about 10 minutes to download over a mobile network, indicating decent server speeds.
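As a quick sanity check on that speed, the average throughput is simply size divided by time. The sketch below assumes decimal units (1 GB = 1,000 MB) and takes the approximate 10-minute figure at face value; `download_throughput` is a hypothetical helper name.

```python
def download_throughput(size_gb: float, minutes: float) -> tuple[float, float]:
    """Return average throughput as (megabytes per second, megabits per second).

    Assumes decimal units (1 GB = 1000 MB).
    """
    megabytes = size_gb * 1000
    seconds = minutes * 60
    mb_per_s = megabytes / seconds
    return mb_per_s, mb_per_s * 8  # 1 byte = 8 bits


mb_s, mbit_s = download_throughput(3.42, 10)
print(f"{mb_s:.1f} MB/s = {mbit_s:.1f} Mbit/s")
```

With these figures it works out to roughly 5.7 MB/s, or about 46 Mbit/s, on average.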

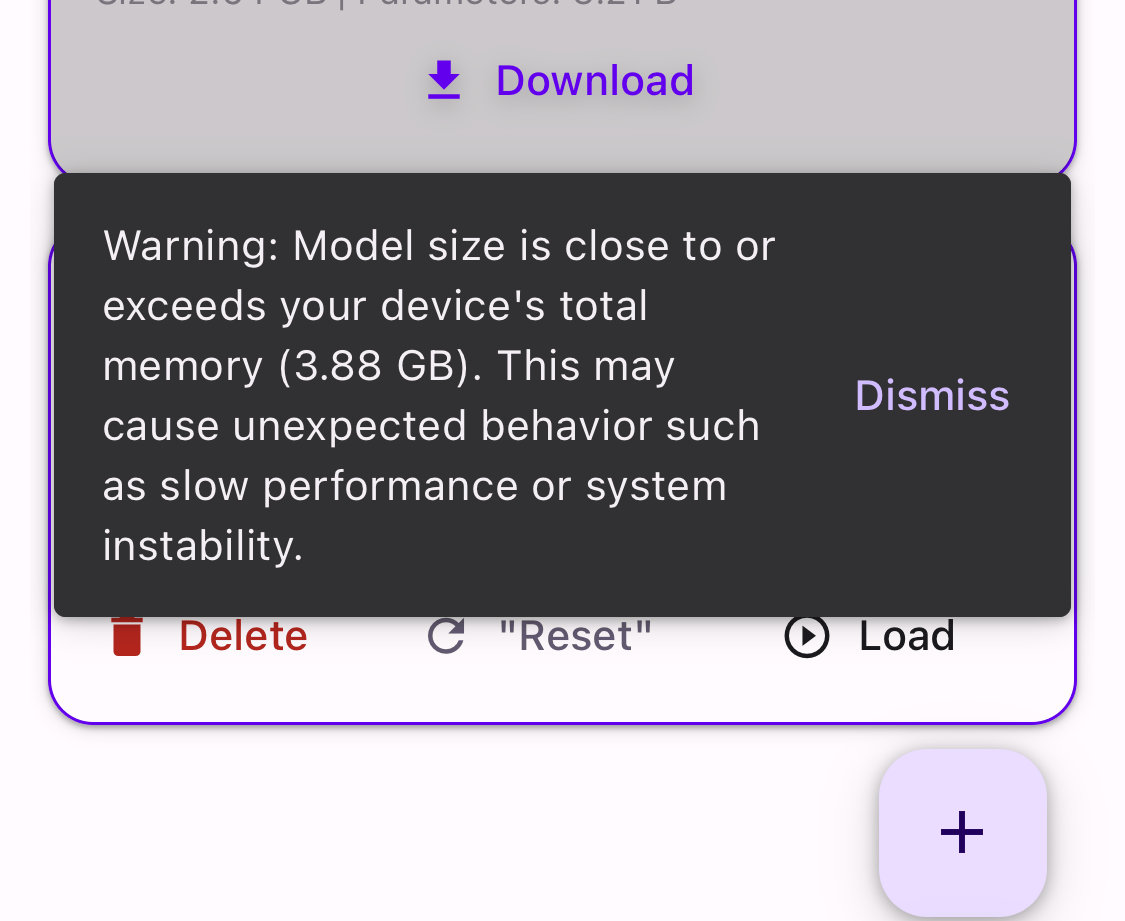

However, on my iPhone 12 mini, which has 4GB of RAM, the model’s size came close to the device’s memory capacity and triggered a warning message.

While it did work, the performance was slow and choppy. For older iPhones, it might be better to try lighter models like Phi 3.5 mini.

High-spec Android smartphones with 8GB or more RAM would likely offer faster and smoother performance.
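A rough way to see why the warning appeared is to compare the model file size with the device’s RAM. The sketch below assumes the whole model must stay resident in memory and uses a hypothetical headroom rule (`max_fraction`) to leave room for the OS, other apps, and runtime buffers; PocketPal’s actual warning threshold is not known here, and `fits_in_ram` is an illustrative name.

```python
def fits_in_ram(model_gb: float, ram_gb: float, max_fraction: float = 0.6) -> bool:
    """Rough check: does the model fit within a fraction of device RAM?

    max_fraction is a hypothetical headroom rule, not PocketPal's threshold;
    the OS, other apps and the model's runtime buffers also need memory.
    """
    return model_gb <= ram_gb * max_fraction


MODEL_GB = 3.42  # Llama 3.2 3B download size reported above

for device, ram_gb in [("iPhone 12 mini", 4.0), ("8GB Android phone", 8.0)]:
    share = MODEL_GB / ram_gb  # fraction of total RAM taken by the weights alone
    print(f"{device}: {share:.0%} of RAM, fits={fits_in_ram(MODEL_GB, ram_gb)}")
```

The model alone takes about 85% of the iPhone 12 mini’s RAM versus about 43% on an 8GB phone, which is consistent with the warning and the sluggish behavior described above.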

It seems we’re approaching an era where the ability to run local LLMs might become a crucial factor when comparing smartphone specs for purchase.

Can Llama 3.2 Run on an iPhone 12 mini?

Despite using a four-generation-old smartphone (iPhone 12 mini), I was able to get responses from the Llama 3.2 3B model.

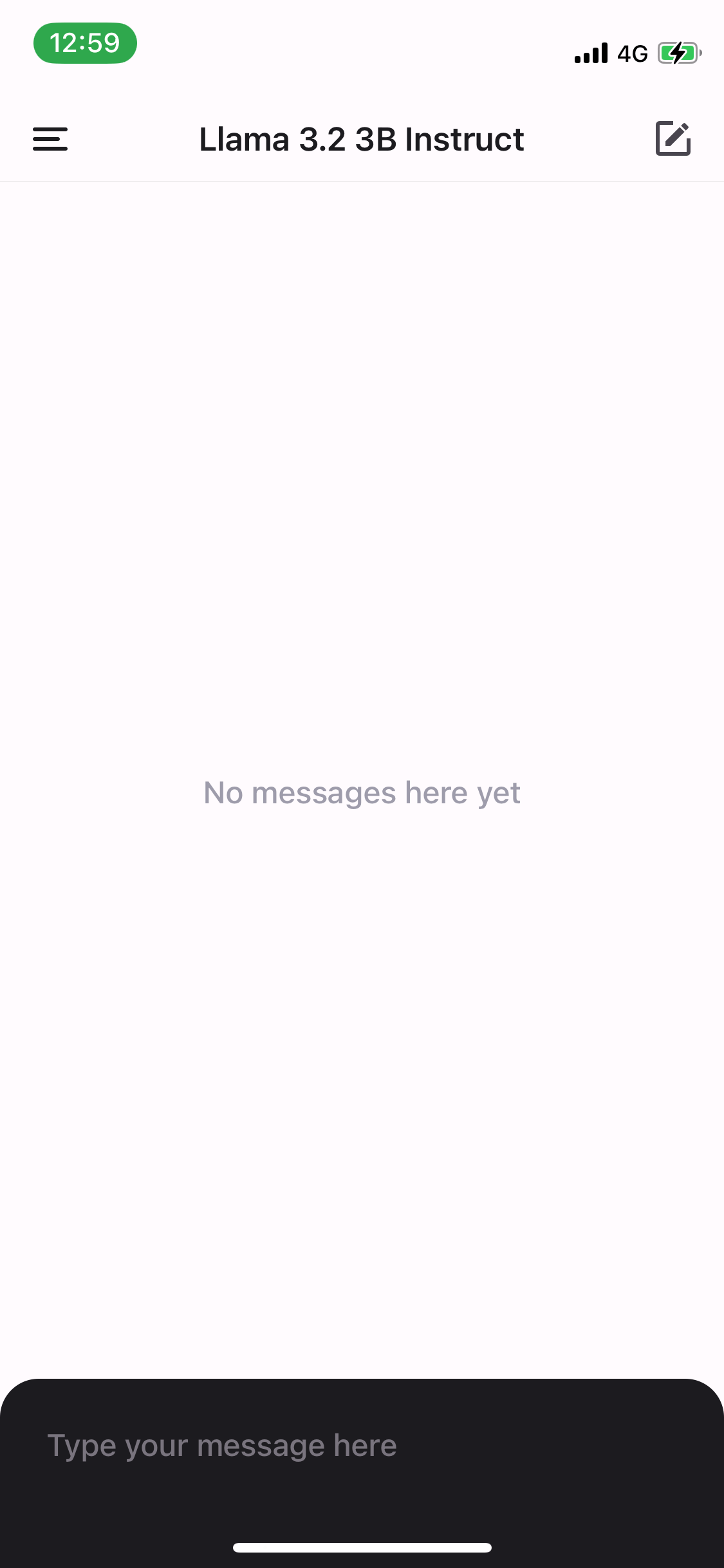

After downloading Llama 3.2 3B in PocketPal, tapping the “Load” button loads the model into the chat interface.

You can type your questions in the “Type your message here” field, and the Llama 3.2 3B model will process them locally on your iPhone, providing answers even in a completely offline environment.

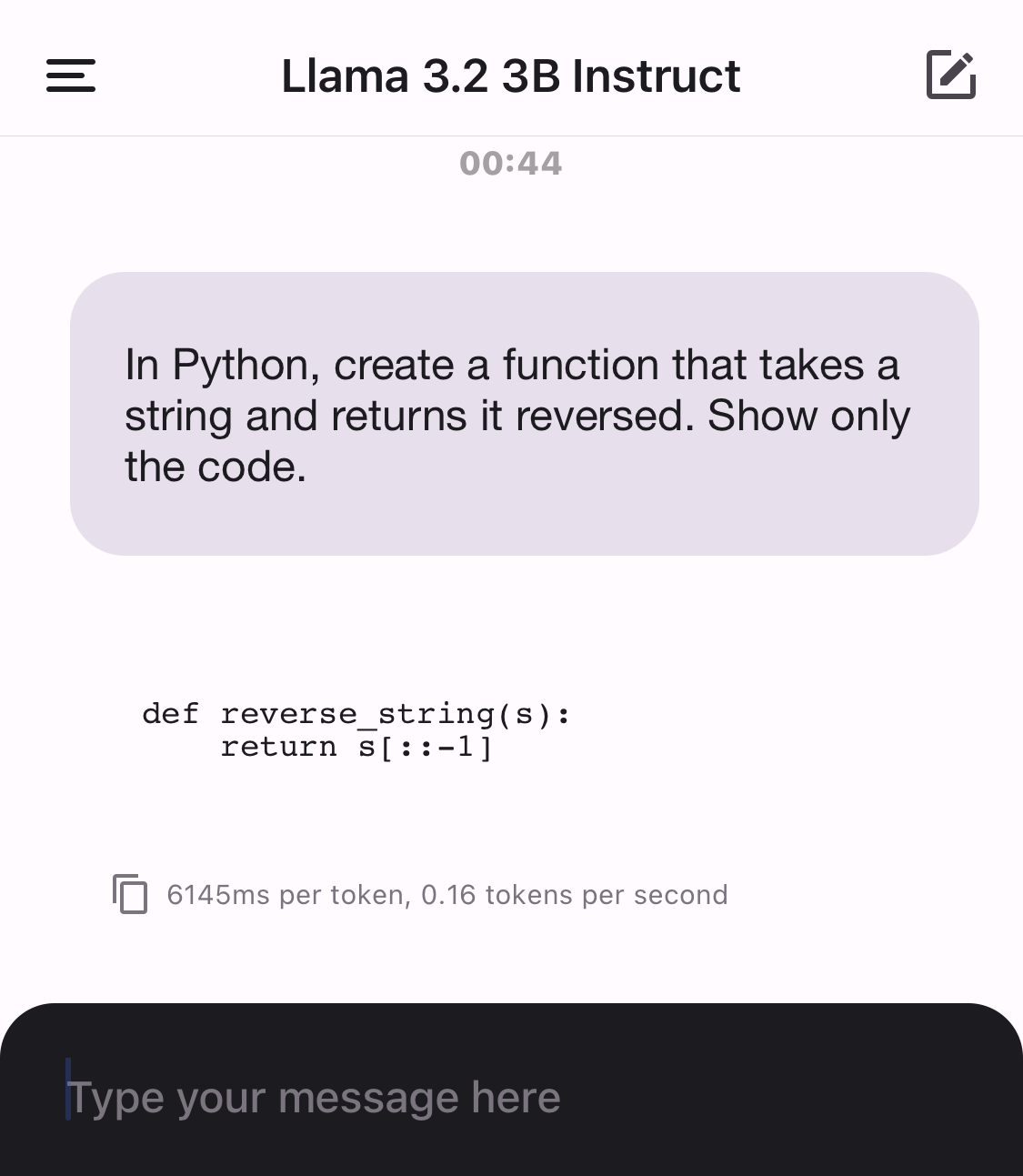

I tested it with the following prompt to write a simple Python function:

“In Python, create a function that takes a string and returns it reversed. Show only the code.”

The model generated the code slowly but surely.
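The model’s actual output is not reproduced in this text. For reference, an idiomatic answer to this prompt is a one-line slice; the snippet below is a sketch of what a correct response looks like, not a transcript of what Llama 3.2 3B produced, and the name `reverse_string` is illustrative.

```python
def reverse_string(s: str) -> str:
    """Return the characters of s in reverse order."""
    # A slice with step -1 walks the string from the last character to the first.
    return s[::-1]


print(reverse_string("PocketPal"))  # prints "laPtekcoP"
```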

Although it was a very short code snippet, it took about 1-2 minutes to generate.

Understandably, even a lightweight AI model is slow to generate responses on a smartphone with limited memory and modest specs.

Newer devices such as the iPhone 15 or iPhone 16, or high-performance devices like the iPad Pro, would likely deliver more practical speeds.

Nevertheless, it’s astonishing to see a large language model with 3 billion parameters running entirely on an iPhone at all, which shows how rapidly the technology is advancing.

New model series are released frequently amid fierce competition between Meta, Microsoft, and Google. If the accuracy of small, lightweight models keeps improving, we may soon have AI assistants on our smartphones capable of handling a wide range of tasks.

The Potential of Smartphone-Compatible Local LLMs

Running lightweight models like Llama 3.2 3B on smartphones allows for processing sensitive information without sending it to external servers, keeping everything contained within the device.

While current smartphone specs might not be sufficient for generating long texts, there are numerous simple yet crucial tasks where local LLMs could excel, such as identifying phone numbers, translating short messages, or suggesting schedules from calendars.

As smartphone specs continue to improve, we might soon be able to run larger 8B models, not just 3B versions.
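Whether an 8B model fits is largely a question of memory. A common rule of thumb is that the weights take roughly parameters × bits per weight ÷ 8 bytes. The sketch below applies it, assuming 4-bit or 8-bit quantization and ignoring file overhead and runtime memory such as the KV cache, so real requirements are higher; `approx_model_size_gb` is a hypothetical helper, not part of PocketPal.

```python
def approx_model_size_gb(params_billion: float, bits_per_weight: float) -> float:
    """Estimate the size of a model's weights in (decimal) GB.

    size_bytes ~= parameters * bits_per_weight / 8. Ignores metadata and
    runtime memory (KV cache, activations), so it is a lower bound.
    """
    return params_billion * bits_per_weight / 8


for params in (3, 8):
    for bits in (4, 8):
        size = approx_model_size_gb(params, bits)
        print(f"{params}B @ {bits}-bit ~ {size:.1f} GB")
```

By this estimate a 3B model at 8 bits is about 3 GB, in the same range as the 3.42GB download above, while an 8B model needs around 4 GB even at 4 bits, which already equals the entire RAM of an iPhone 12 mini.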

PocketPal is an innovative app that makes advanced AI technology accessible to everyone, anytime, anywhere. It offers a glimpse into how AI could integrate into users’ daily lives.

With rumors of Apple Intelligence launching in October, we eagerly anticipate future app updates and further developments in local LLMs.